Density-weighted Uncertainty Sampling

Note: The generated animation can be found at the bottom of the page.

Google Colab Note: If the notebook fails to run after installing the needed packages, try restarting the runtime (Ctrl + M) under Runtime -> Restart session.

Notebook Dependencies

Uncomment the following cell to install all dependencies for this tutorial.

# !pip install scikit-activeml

import numpy as np
from matplotlib import pyplot as plt, animation
from sklearn.datasets import make_blobs
from skactiveml.utils import MISSING_LABEL, labeled_indices, unlabeled_indices
from skactiveml.visualization import plot_utilities, plot_decision_boundary
from sklearn.linear_model import LogisticRegression
from sklearn.mixture import GaussianMixture
from skactiveml.classifier import SklearnClassifier
from skactiveml.pool import UncertaintySampling

random_state = np.random.RandomState(0)

# Build a dataset.
X, y_true = make_blobs(n_samples=200, n_features=2,
                       centers=[[0, 1], [-3, .5], [-1, -1], [2, 1], [1, -.5]],
                       cluster_std=.7, random_state=random_state)
y_true = y_true % 2
y = np.full(shape=y_true.shape, fill_value=MISSING_LABEL)

# Initialise the classifier.
clf = SklearnClassifier(LogisticRegression(), classes=np.unique(y_true))

# Initialise the query strategy.
qs = UncertaintySampling(method='least_confident', random_state=random_state)

# Estimate the sample density with a Gaussian mixture model.
# score_samples returns log-likelihoods, so np.exp turns them into densities.
gmm = GaussianMixture(init_params='kmeans', n_components=5)
gmm.fit(X)
density = np.exp(gmm.score_samples(X))

# Preparation for plotting.
fig, ax = plt.subplots()
feature_bound = [[min(X[:, 0]), min(X[:, 1])], [max(X[:, 0]), max(X[:, 1])]]
artists = []

# The active learning cycle:
n_cycles = 20

for c in range(n_cycles):

# Fit the classifier.

clf.fit(X, y)

# Get labeled instances.

X_labeled = X[labeled_indices(y)]

# Query the next instance/s.

query_idx = qs.query(X=X, y=y, clf=clf, utility_weight=density)

# Plot the labeled data.

coll_old = list(ax.collections)

title = ax.text(

0.5, 1.05, f"Decision boundary after acquring {c} labels",

size=plt.rcParams["axes.titlesize"], ha="center",

transform=ax.transAxes

)

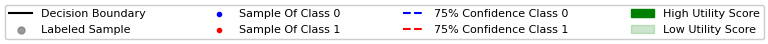

ax = plot_utilities(qs, X=X, y=y, clf=clf, utility_weight=density,

candidates=unlabeled_indices(y), replace_nan=None, res=25,

feature_bound=feature_bound, ax=ax)

ax.scatter(X[:, 0], X[:, 1], c=y_true, cmap="coolwarm", marker=".",

zorder=2)

ax.scatter(X_labeled[:, 0], X_labeled[:, 1], c="grey", alpha=.8,

marker=".", s=300)

ax = plot_decision_boundary(clf, feature_bound, ax=ax)

coll_new = list(ax.collections)

coll_new.append(title)

artists.append([x for x in coll_new if (x not in coll_old)])

# Label the queried instances.

y[query_idx] = y_true[query_idx]

ani = animation.ArtistAnimation(fig, artists, interval=1000, blit=True)
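To make the role of `utility_weight=density` in the script more concrete, here is a minimal NumPy-only sketch of density-weighted least-confident sampling. It assumes that the weights are multiplied elementwise with the uncertainty scores before the best candidate is picked. The helper names `least_confident` and `density_weighted_query` and the toy numbers are hypothetical and are not part of scikit-activeml.

```python
import numpy as np


def least_confident(probas):
    """Uncertainty as 1 minus the highest predicted class probability."""
    probas = np.asarray(probas, dtype=float)
    return 1.0 - probas.max(axis=1)


def density_weighted_query(probas, density, is_unlabeled):
    """Return the index of the unlabeled sample with the highest
    density-weighted utility, together with all utilities."""
    # Assumption: the weight is applied by elementwise multiplication.
    utilities = least_confident(probas) * np.asarray(density, dtype=float)
    # Already labeled samples are excluded by setting their utility to -inf.
    utilities = np.where(is_unlabeled, utilities, -np.inf)
    return int(np.argmax(utilities)), utilities


# Toy values, made up for illustration only.
probas = np.array([[0.50, 0.50],   # most uncertain, but in a sparse region
                   [0.60, 0.40],   # fairly uncertain, in a dense region
                   [0.90, 0.10],   # confident, in a very dense region
                   [0.55, 0.45]])  # uncertain, but already labeled
density = np.array([0.01, 0.30, 0.50, 0.20])
is_unlabeled = np.array([True, True, True, False])

best_idx, utilities = density_weighted_query(probas, density, is_unlabeled)
print("plain uncertainty picks:", int(np.argmax(least_confident(probas))))
print("density-weighted picks: ", best_idx)
```

With these toy values, plain uncertainty would favour sample 0, which is the most uncertain one but lies in a sparse region, while the density-weighted score prefers sample 1, which is nearly as uncertain and lies in a much denser region.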

References:

The implementation of this strategy is based on Donmez et al. [1] and Nguyen and Smeulders [2].
